One scenario for the --spider option is checking a scheduled download in advance: copy the wget line exactly from the schedule, then add the --spider option to check it.
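As a rough sketch of that check, the helper below wraps wget --spider and reports whether the URL is reachable. The function name check_download and the URL in the usage comment are placeholders chosen for illustration, not part of the original schedule.

```shell
#!/bin/sh
# check_download is a hypothetical helper name; it only wraps wget --spider.
# --spider makes wget check that the URL exists without saving the file,
# and --quiet suppresses wget's normal output.
check_download() {
    if wget --spider --quiet "$1"; then
        echo "OK: $1"
    else
        echo "FAILED: $1"
        return 1
    fi
}

# Usage (placeholder URL):
#   check_download "https://example.com/file.tar.gz"
```

The exit status follows wget's own, so the helper can also be used in a cron wrapper or an if statement.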

WGET USER AGENT DOWNLOAD

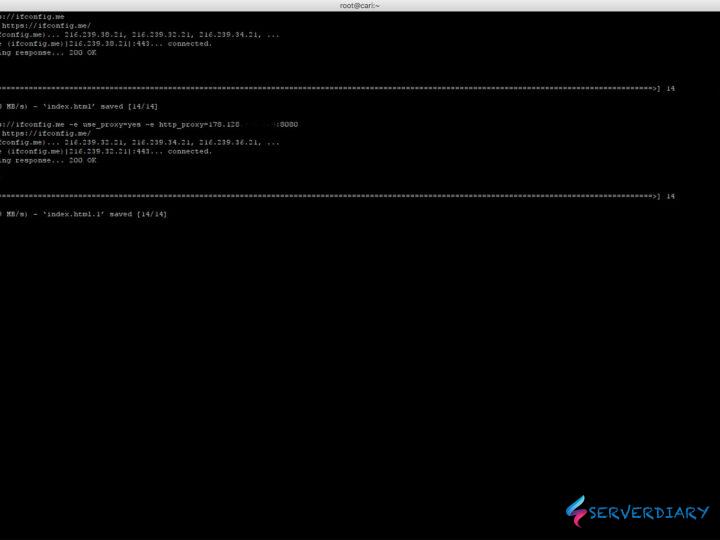

Test Download URL Using wget --spider: When you are going to do a scheduled download, you should check beforehand whether the download will work at the scheduled time.

Mask User Agent and Display wget like Browser Using wget --user-agent: Some websites refuse to let you download their pages when they identify that the user agent is not a browser. You can mask the user agent with the --user-agent option so that wget presents itself as a browser.

Download in the Background Using wget -b: For a huge download, put the download in the background using the -b option. It initiates the download and gives the shell prompt back to you. You can always check the status of the download using tail -f: tail -f wget-log.
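The --user-agent and -b options described here might be used roughly as follows. Both function names are hypothetical, and the User-Agent string is only an illustrative browser-like value, not one the original text prescribes.

```shell
#!/bin/sh
# Illustrative browser-like User-Agent string (an assumption, pick your own).
UA="Mozilla/5.0 (X11; Linux x86_64; rv:109.0) Gecko/20100101 Firefox/115.0"

# Present wget to the server as a browser.
fetch_as_browser() {
    wget --user-agent="$UA" "$1"
}

# Start the download in the background; wget writes its progress to
# wget-log in the current directory unless told otherwise.
fetch_in_background() {
    wget -b "$1"
}

# Usage (placeholder URLs):
#   fetch_as_browser "https://example.com/page.html"
#   fetch_in_background "https://example.com/big.iso" && tail -f wget-log
```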

Continue the Incomplete Download Using wget -c: Restart a download which got stopped in the middle using the -c option. This is very helpful when you have initiated a very big file download which got interrupted in the middle. Instead of starting the whole download again, you can resume it from where it was interrupted.

Note: If a download is stopped in the middle and you restart it without the -c option, wget will automatically append .1 to the filename, because a file with the previous name already exists. If a file with .1 already exists, it will download the file with .2 at the end.

Letting wget use all the bandwidth it can get might not be acceptable when you are downloading huge files on production servers. To avoid that, you can limit the download speed using --limit-rate, for example to 200k.
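A minimal sketch of resuming and rate-limiting, assuming hypothetical helper names; the 200k default mirrors the rate mentioned in the text, and the URLs in the usage comments are placeholders.

```shell
#!/bin/sh
# Resume a partially downloaded file instead of starting over.
# Without -c, wget would save a fresh copy under NAME.1, NAME.2, and so on.
resume_download() {
    wget -c "$1"
}

# Cap the transfer rate so the download does not saturate the link.
# The second argument is optional and defaults to 200k (kilobytes per second).
throttled_download() {
    wget --limit-rate="${2:-200k}" "$1"
}

# Usage (placeholder URLs):
#   resume_download "https://example.com/big.iso"
#   throttled_download "https://example.com/big.iso"        # 200k
#   throttled_download "https://example.com/big.iso" 1m     # 1 MB/s
```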

WGET USER AGENT FULL

Specify Download Speed / Download Rate Using wget --limit-rate: By default, wget tries to occupy the full available bandwidth while it runs.

When the filename that wget picks by default is not appropriate, you can specify the output file name using the -O option.
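The -O option might be used like this. The helper name save_as, the host example.com, and the output name taglist.zip are assumptions for illustration; only the download_script.php?src_id=7701 part comes from the text.

```shell
#!/bin/sh
# save_as URL FILE: store the download under FILE instead of the name
# wget would derive from the last path segment of the URL.
save_as() {
    wget -O "$2" "$1"
}

# Usage (placeholder host and output name):
#   save_as "https://example.com/download_script.php?src_id=7701" "taglist.zip"
```

Quoting the URL matters here, since an unquoted ? can be treated as a glob character by the shell.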

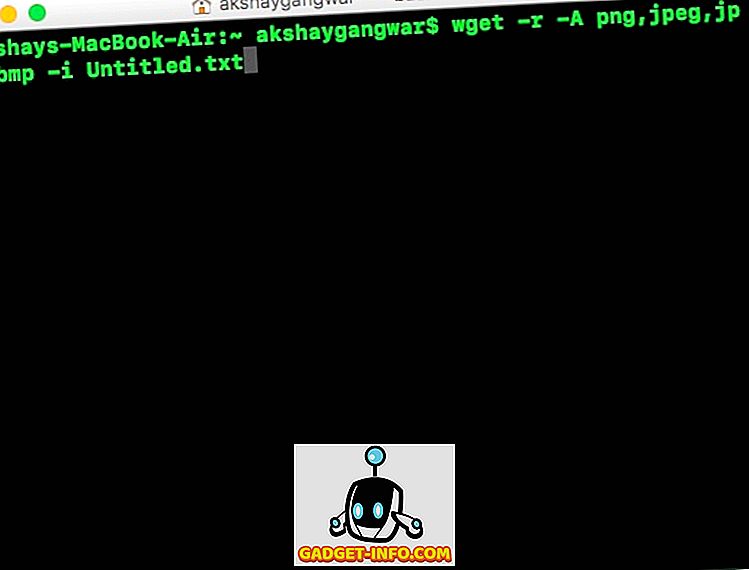

Download and Store With a Different File name Using wget -O: By default wget picks the filename from the last word after the last forward slash, which may not always be appropriate. For example, for a URL ending in download_script.php?src_id=7701, wget will download and store the file with the name download_script.php?src_id=7701.

A plain download of a single file from the internet stores it in the current directory. While downloading, wget shows a progress bar with the following information: percentage of download completion, total bytes downloaded so far, current download speed, and remaining time to download.

The Ultimate Wget Download Guide With 15 Examples. Novem… Posted by Tournas Dimitrios in Linux.

Your browser does a good job of fetching web documents and displaying them, but there are times when you need an extra-strength download manager to get those tougher HTTP jobs done. A versatile, old-school Unix program called Wget is a highly hackable, handy little tool that can take care of all your downloading needs. Whether you want to mirror an entire web site, automatically download music or movies from a set of favorite weblogs, or transfer huge files painlessly on a slow or intermittent network connection, Wget's for you.

Wget, the "non-interactive network retriever," is called at the command line. The URL is the address of the file(s) you want Wget to download. The magic in this little tool is the long menu of options available that make some really neat downloading tasks possible. Here are 15 examples of what you can do with Wget and a few dashes and letters in the options part of the command.

The pages linked to in the homepage were on a remote server, so wget would ignore them. Just add "--span-hosts" to tell wget to go there, and "-D" with that server's domain if you want to restrict spidering to that domain.
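The --span-hosts advice can be sketched as below, spelling out the short options from the question (-mkEpnp) in their long forms. The function name and the domains in the usage comment are hypothetical, and this is one possible combination of options rather than a confirmed fix for the site in question.

```shell
#!/bin/sh
# crawl_across_hosts START_URL DOMAIN_LIST
# --span-hosts lets the recursive crawl follow links to other hosts;
# --domains restricts which hosts may be followed (comma-separated list).
crawl_across_hosts() {
    wget --mirror --convert-links --adjust-extension --page-requisites \
         --no-parent -e robots=off --user-agent="Mozilla" \
         --span-hosts --domains="$2" "$1"
}

# Usage (placeholder domains):
#   crawl_across_hosts "https://example.com/" "example.com,cdn.example.net"
```

Without --domains, --span-hosts places no limit on which hosts the crawl may wander to, so the two are usually given together.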
I'd like to crawl a web site to build its sitemap.

Problem is, the site uses an htaccess file to block spiders, so the following command only downloads the homepage (index.html) and stops, although it does contain links to other pages: wget -mkEpnp -e robots=off -U Mozilla

Since I have no problem accessing the rest of the site with a browser, I assume the "-e robots=off -U Mozilla" options aren't enough to have wget pretend it's a browser.

Are there other options I should know about? Does wget handle cookies by itself?

I added those to wget.ini, to no avail: hsts=0, Header = Accept: text/html,application/xhtml+xml,application/xml;q=0.9,*/*;q=0.8
